Data ethics is essential to delivering responsible data science, machine learning, and AI projects. The field is still growing and hotly debated, so many organizations, DataKind included, turn to experts to learn from their experience. At DataKind, we understand that amid overwhelming information and a fast-changing field, even experts can lose sight of how communities view AI and how that, in turn, affects their trust in the sector. How lucky we are to have data ethics experts in our own volunteer ranks!

At our Volunteer Summit this year, longtime DataKind friend and former volunteer Cathy O’Neil, Ph.D., delivered the keynote address. As the author of Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy and founder of O’Neil Risk Consulting and Algorithmic Auditing (ORCAA), she shared lessons from her well-known book and teased new insights from her forthcoming publication. Cathy also focused on practical examples that the DataKind community could relate to as we continue to build responsible AI for good.

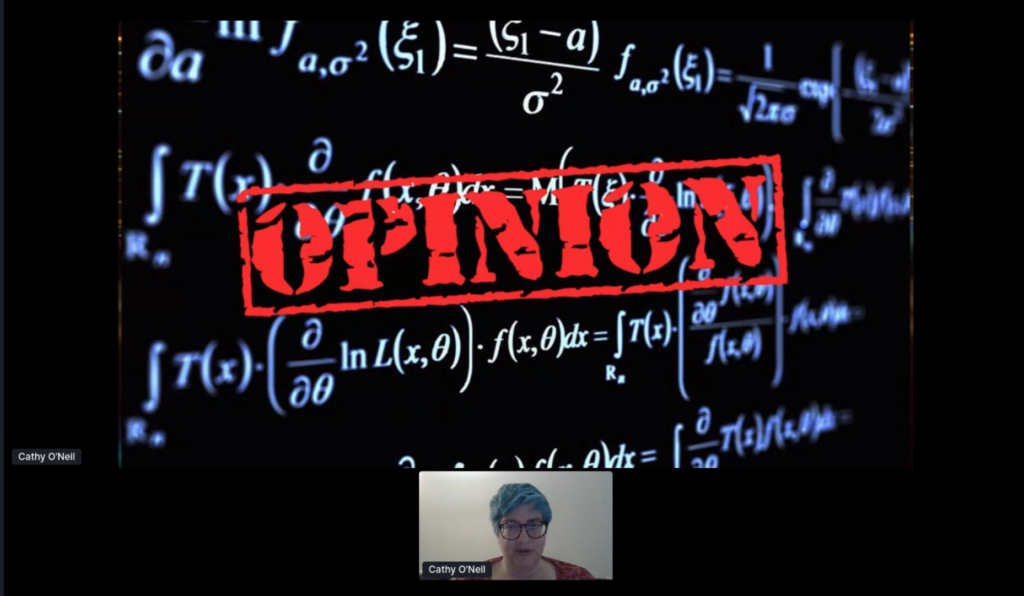

Kicking off the discussion, Cathy reminded us how intimidating terms like AI can be to people who aren’t familiar with them. Even a hint of advanced mathematics can lead people to assume the underlying concepts are too difficult to understand and, more dangerously, that there’s no point in even trying. As a result, many of those who build the algorithms no longer feel they need to be able to explain them. That’s a big problem, according to Cathy.

“When people would ask questions about algorithms that were denying them jobs, or getting them fired, or denying them mortgages, they were consistently told, ‘It’s math, you won’t understand it,’” Cathy recounted during her keynote speech. “If you can’t explain it to me, it’s not my fault, it’s your fault. If there is a right being tread upon, it’s not a question of whether I can understand it, it’s a question of what my rights are.” Moreover, she urges us to remember algorithms are not objective. They’re embedded with the opinions and agendas of those who create them. The models are trained to predict “success,” but who decides what success looks like? “We often forget to think through who is in power here and what is the agenda they’re burying inside their code,” she adds.

A large part of the problem is the public’s unquestioning trust in anything that appears mathematical. For example, a teacher was fired based on a “teacher efficacy” scoring system. When she tried to appeal her termination, she was told the decision was made by an algorithm, so it had to be fair. Not only can people lose their jobs because of an algorithm, but they may also be screened out of the hiring process in the first place and never even know it.

The stakes only get higher from there. Consider policing assisted by black box algorithms: models where users, and in some cases designers, have no visibility into how outcomes are generated from the input data. Cathy made the case that we don’t actually have “crime data,” we only have “arrest data.” When we send cops to “hot spots” based on where they’ve made the most arrests in the past, we’re just reinforcing the over-policing of certain neighborhoods and calling it science. “It’s a propagation of an unfair racist system where we add this sort of scientism and trust and blind faith – and it just prolongs it,” said Cathy.
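For the data scientists in our community, the “arrest data” feedback loop can be sketched as a toy simulation. This is our own illustration, not something Cathy presented: the function name, the starting counts, and the single-patrol rule are all hypothetical simplifications. Both areas have exactly the same underlying offense rate, but an arrest is only recorded where the patrol is sent.

```python
def simulate_hot_spot_patrols(initial_arrests, offense_rate, rounds):
    """Toy model (hypothetical): one patrol goes to the area with the most
    recorded arrests each round.

    Every area has the same underlying offense_rate, but arrests are only
    recorded in the area that is actually patrolled.
    """
    arrests = list(initial_arrests)
    for _ in range(rounds):
        # "Hot spot" = area with the most past arrests, not the most crime.
        hot_spot = arrests.index(max(arrests))
        # Only the patrolled area adds to the arrest record.
        arrests[hot_spot] += offense_rate
    return arrests


# Two areas with identical offense rates and a one-arrest head start for area 0.
final_counts = simulate_hot_spot_patrols(
    initial_arrests=[11, 10], offense_rate=5, rounds=10
)
print(final_counts)  # [61, 10]
```

In this sketch, a one-arrest difference in the historical record is enough to send every patrol to the same area, and the growing arrest count then looks like evidence that the area deserved the attention.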

So, what can we do? At DataKind, we have an opportunity to set an example for the rest of the industry of what it means to design and implement algorithms to be consistent with our values. In addition to striving for explainable AI, we can take a hard look at the predictive models we develop and ask ourselves “for whom does this fail?” Finally, we can demand stronger, better regulation with more severe consequences for those who infringe upon human rights, placing the burden of explainability firmly on those who develop the algorithms. “We don’t have to let algorithms bypass anti-discrimination laws,” adds Cathy. “Algorithmic harm is not defined by the companies making money. Algorithmic harm is defined by the people getting screwed — that’s the step we don’t have yet in invisible systems.”
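One modest way to start asking “for whom does this fail?” is to break a model’s errors out by group instead of reporting a single overall accuracy. The sketch below is a minimal illustration under our own assumptions: the `error_rates_by_group` helper, the group labels, and the toy records are hypothetical and not drawn from any DataKind project.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {group: (false_positive_rate, false_negative_rate)}.

    Each record is a (group, actual, predicted) triple with boolean labels.
    A rate is None when a group has no examples of that class.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for group, actual, predicted in records:
        c = counts[group]
        if actual:
            c["pos"] += 1
            if not predicted:
                c["fn"] += 1  # wrongly denied
        else:
            c["neg"] += 1
            if predicted:
                c["fp"] += 1  # wrongly approved
    rates = {}
    for group, c in counts.items():
        fpr = c["fp"] / c["neg"] if c["neg"] else None
        fnr = c["fn"] / c["pos"] if c["pos"] else None
        rates[group] = (fpr, fnr)
    return rates


# Hypothetical toy data: (group, actually qualified, model said "approve")
records = [
    ("A", True, True), ("A", True, True), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", True, True), ("B", False, False), ("B", False, True),
]
rates = error_rates_by_group(records)
print(rates)  # {'A': (0.0, 0.0), 'B': (0.5, 0.5)}
```

Here the model is right on 6 of 8 records overall, yet every one of its mistakes falls on group B. A check like this does not prove a model is fair, but it makes that kind of concentrated failure visible.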

We’re so fortunate to have had the opportunity to hear from Cathy at the Volunteer Summit, and we want to thank her for inspiring DataKinders around the globe. DataKind’s work is achieved through our network of 20,000+ community members worldwide, and we appreciate all our presenters and volunteers who contributed to this year’s event, sharing data science learnings and best practices and advancing the Data Science and AI for Good movement.

Join us in advancing the use of data science to support causes that can help make the world a better place.

- Interested in supporting our work? Donate here.

- Interested in sponsoring a project? Partner with us.

- Interested in volunteering with DataKind? Look no further.

- Interested in submitting a project? Go for it!
